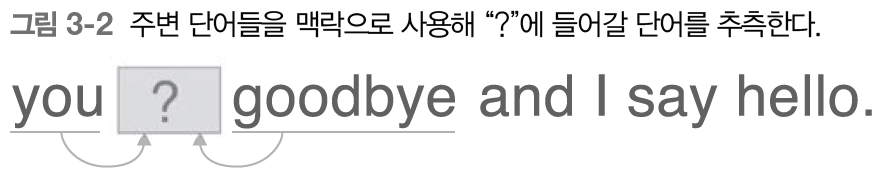

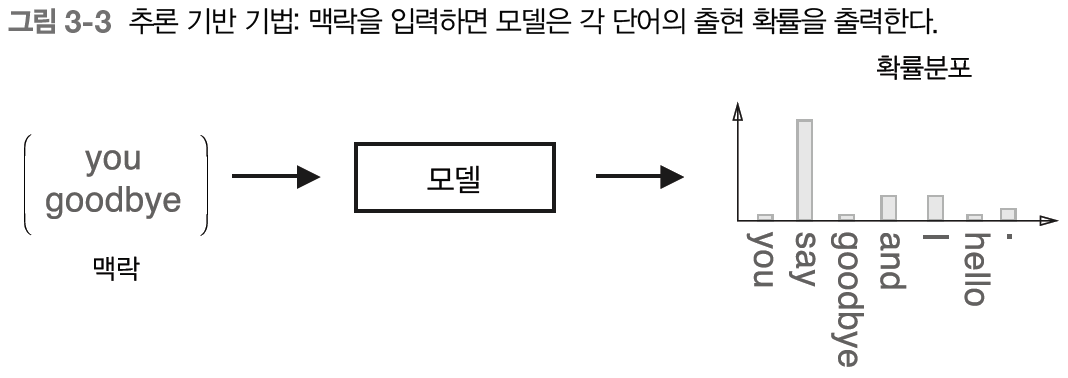

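- Inference-based approach ⇒ represents each word as a vector and learns that vector by training a model

- Like the count-based approach, it rests on the distributional hypothesis (a word's meaning is shaped by the words around it)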

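Problems with count-based methods

- A count-based method processes the entire corpus in one pass (for example, building a co-occurrence matrix and applying SVD to it).

- As a result, it takes a very long time on a large corpus.

- word2vec (an inference-based method), by contrast, trains on mini-batches.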
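To make the mini-batch point concrete, here is a minimal sketch of drawing shuffled mini-batches from (contexts, target) pairs. The helper name `iterate_minibatches` and the batch size are assumptions for illustration, not something from the book; the toy arrays are the word IDs for "you say goodbye and i say hello ." with a window size of 1.

```python
import numpy as np


def iterate_minibatches(contexts, target, batch_size, rng):
    """Yield shuffled (contexts, target) mini-batches that cover the data once."""
    idx = rng.permutation(len(target))
    for start in range(0, len(idx), batch_size):
        batch = idx[start:start + batch_size]
        yield contexts[batch], target[batch]


# Toy data: 6 samples, each with 2 context word IDs and 1 target word ID
contexts = np.array([[0, 2], [1, 3], [2, 4], [3, 1], [4, 5], [1, 6]])
target = np.array([1, 2, 3, 4, 1, 5])

rng = np.random.default_rng(0)
batches = list(iterate_minibatches(contexts, target, batch_size=4, rng=rng))
for ctx_batch, tgt_batch in batches:
    print(ctx_batch.shape, tgt_batch.shape)
```

Each parameter update only needs one of these small batches, so the model never has to see the whole corpus at once.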

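Inference-based methods

word2vec

- CBOW model

- skip-gram model

- An inference-based method

- A simple two-layer neural network

- The weights can be retrained, so newly added words can be learned efficiently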
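A quick way to see how this simple network turns a word into a vector: multiplying a one-hot vector by the input weight matrix just selects one row of that matrix. The sketch below assumes a vocabulary of 7 words and a hidden size of 3 (both arbitrary choices for illustration).

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, hidden_size = 7, 3          # assumed sizes, for illustration only
W_in = rng.standard_normal((vocab_size, hidden_size))

word_id = 2
one_hot = np.zeros(vocab_size)
one_hot[word_id] = 1

h = one_hot @ W_in                      # matrix product with a one-hot vector
print(np.allclose(h, W_in[word_id]))    # the product equals row `word_id` of W_in
```

This is why the rows of the input weight matrix can be read as the distributed representations of the words.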

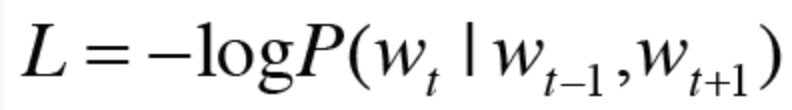

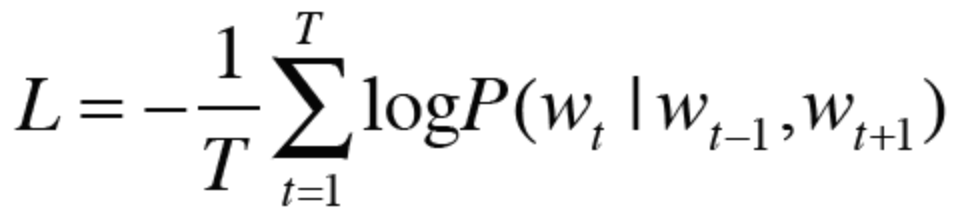

CBOW model

Training the model

```python
import numpy as np


def preprocess(text):
    text = text.lower()               # lowercase everything
    text = text.replace('.', ' .')    # split the period off as its own token
    words = text.split(' ')           # tokenize on spaces

    # map each word to an integer ID (and back)
    word_to_id = {}
    id_to_word = {}
    for word in words:
        if word not in word_to_id:
            new_id = len(word_to_id)
            word_to_id[word] = new_id
            id_to_word[new_id] = word

    corpus = np.array([word_to_id[w] for w in words])
    return corpus, word_to_id, id_to_word


text = 'You say goodbye and I say hello.'
corpus, word_to_id, id_to_word = preprocess(text)
print(corpus)
print(id_to_word)
```

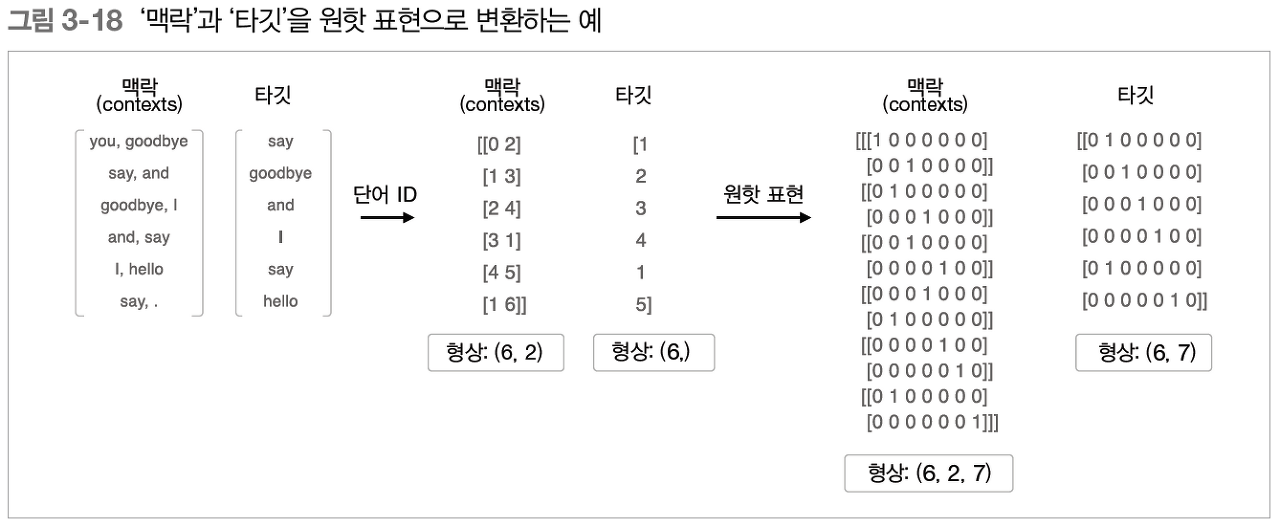

```python
def create_contexts_target(corpus, window_size=1):
    # targets are every word that has a full window on both sides
    target = corpus[window_size:-window_size]
    contexts = []

    for idx in range(window_size, len(corpus) - window_size):
        cs = []
        for t in range(-window_size, window_size + 1):
            if t == 0:
                continue              # skip the target word itself
            cs.append(corpus[idx + t])
        contexts.append(cs)

    return np.array(contexts), np.array(target)


contexts, target = create_contexts_target(corpus, window_size=1)
print(contexts)
print(target)
```

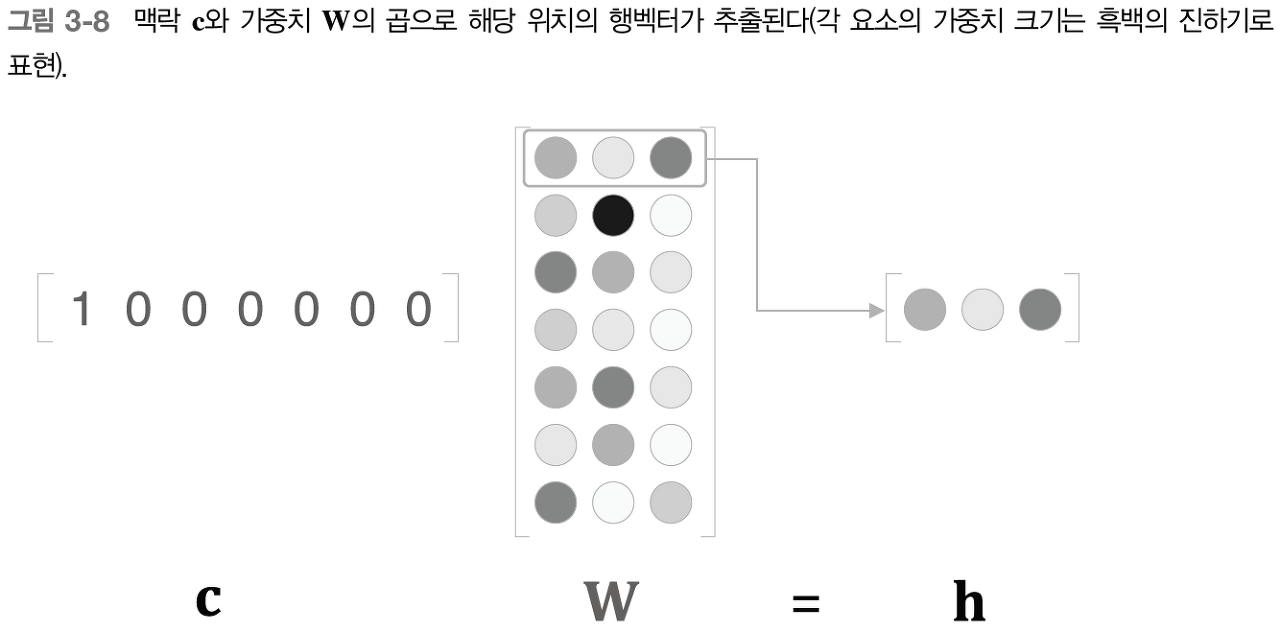

```python
def convert_one_hot(corpus, vocab_size):
    N = corpus.shape[0]

    if corpus.ndim == 1:
        # targets: (N,) -> (N, vocab_size)
        one_hot = np.zeros((N, vocab_size), dtype=np.int32)
        for idx, word_id in enumerate(corpus):
            one_hot[idx, word_id] = 1

    elif corpus.ndim == 2:
        # contexts: (N, C) -> (N, C, vocab_size)
        C = corpus.shape[1]
        one_hot = np.zeros((N, C, vocab_size), dtype=np.int32)
        for idx_0, word_ids in enumerate(corpus):
            for idx_1, word_id in enumerate(word_ids):
                one_hot[idx_0, idx_1, word_id] = 1

    return one_hot


text = 'You say goodbye and I say hello.'
corpus, word_to_id, id_to_word = preprocess(text)
contexts, target = create_contexts_target(corpus, window_size=1)

vocab_size = len(word_to_id)
target = convert_one_hot(target, vocab_size)
contexts = convert_one_hot(contexts, vocab_size)
```

```python
# Assumes the book's companion repository (its common/ package) is on the import path
from common.layers import MatMul, SoftmaxWithLoss


class SimpleCBOW:
    def __init__(self, vocab_size, hidden_size):
        V, H = vocab_size, hidden_size

        # initialize the weights
        W_in = 0.01 * np.random.randn(V, H).astype('f')
        W_out = 0.01 * np.random.randn(H, V).astype('f')

        # create the layers
        self.in_layer0 = MatMul(W_in)
        self.in_layer1 = MatMul(W_in)
        self.out_layer = MatMul(W_out)
        self.loss_layer = SoftmaxWithLoss()

        # collect all weights and gradients into lists
        layers = [self.in_layer0, self.in_layer1, self.out_layer]
        self.params, self.grads = [], []
        for layer in layers:
            self.params += layer.params
            self.grads += layer.grads

        # keep the distributed representations of the words as an instance variable
        self.word_vecs = W_in
```

The constructor takes the vocabulary size (vocab_size) and the number of hidden-layer neurons (hidden_size) as arguments.

Two weight matrices (W_in, W_out) are created and initialized with small random values.

The required layers are created: two on the input side and one on the output side.

A Softmax with Loss layer is created as well.

The input-side MatMul layers that process the context are created one per context word, and they all share the same weights.

The parameters and gradients used by the network are collected into the params and grads lists.
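The weight sharing described above comes down to both input layers holding a reference to the same array rather than a copy. Here is a minimal sketch of that idea; `TinyMatMul` is a hypothetical stand-in written for this example, not the book's `MatMul` layer, and the sizes (7, 5) are arbitrary.

```python
import numpy as np


class TinyMatMul:
    """Hypothetical stand-in for a MatMul layer: stores a reference to W, not a copy."""

    def __init__(self, W):
        self.params = [W]

    def forward(self, x):
        W, = self.params
        return x @ W


W_in = 0.01 * np.random.randn(7, 5)
in_layer0 = TinyMatMul(W_in)
in_layer1 = TinyMatMul(W_in)

# Both layers point at the very same array object
shares_memory = in_layer0.params[0] is in_layer1.params[0]
print(shares_memory)

# An in-place change made through one layer is visible through the other
before = in_layer1.params[0][0, 0]
in_layer0.params[0][0, 0] += 1.0
after = in_layer1.params[0][0, 0]
print(after - before)
```

Because the array is shared, an update to the input weights applies to every context position at once.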

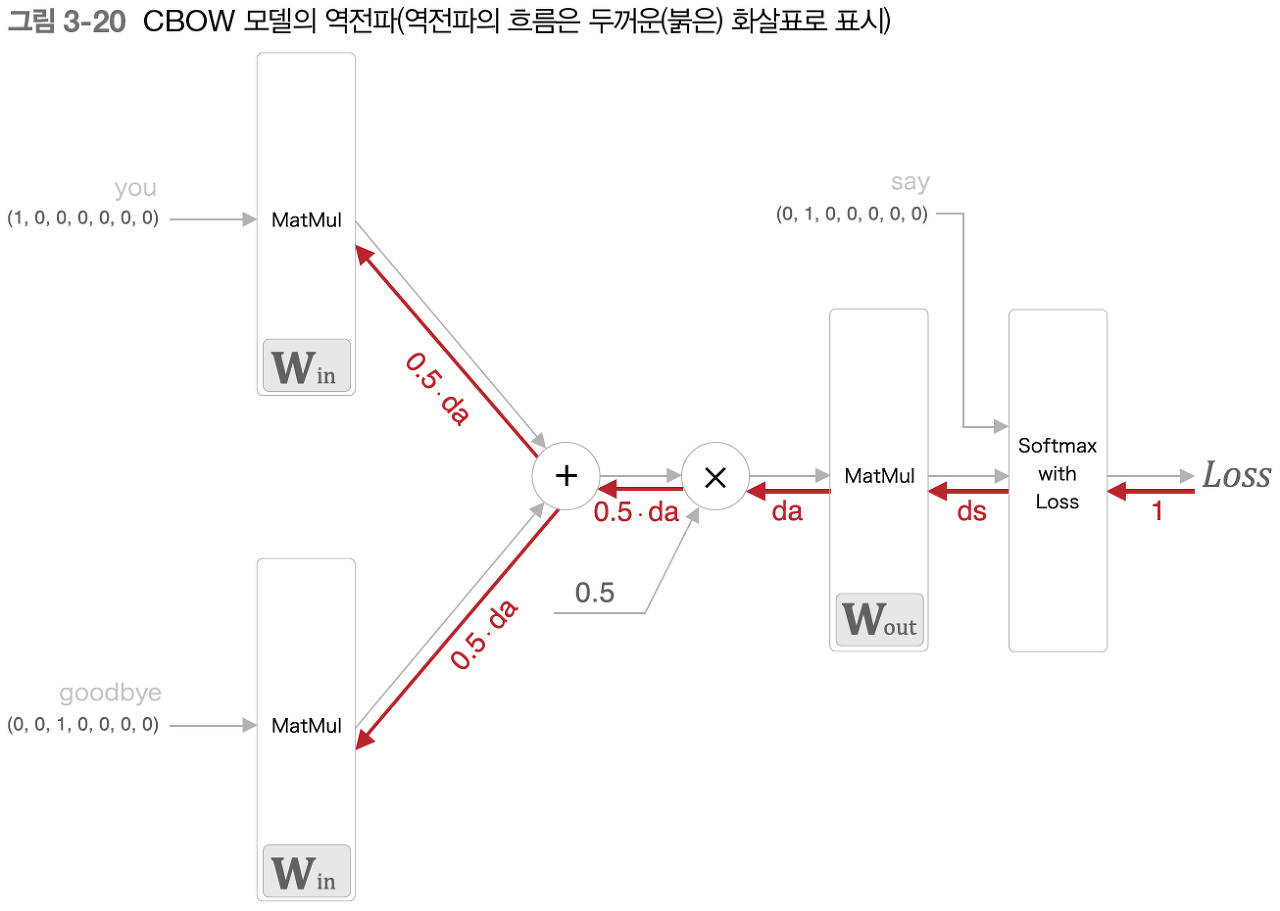

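Next comes forward(), the forward pass of the network. It takes the contexts and the target as arguments and returns the loss.

```python
# Method of SimpleCBOW (continues the class defined above)
def forward(self, contexts, target):
    h0 = self.in_layer0.forward(contexts[:, 0])   # first context word
    h1 = self.in_layer1.forward(contexts[:, 1])   # second context word
    h = (h0 + h1) * 0.5                           # average the two hidden vectors
    score = self.out_layer.forward(h)
    loss = self.loss_layer.forward(score, target)
    return loss
```

In the example above, contexts has shape (6, 2, 7):

the 0th dimension is the mini-batch size (the number of samples),

the 1st dimension is the number of context words (2 here, because the window size is 1),

the 2nd dimension is the one-hot vector (its length equals the vocabulary size).

target has shape (6, 7) in the same example.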
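The shapes above can be traced with plain NumPy. This is only a sketch under assumptions: the hidden size `H = 5` is an arbitrary choice, and the inline softmax and cross-entropy are a simple stand-in for the Softmax with Loss layer, not the book's implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
N, C, V, H = 6, 2, 7, 5      # samples, context words, vocabulary size, hidden size (H assumed)

# Word IDs for "you say goodbye and i say hello ." with window size 1
context_ids = np.array([[0, 2], [1, 3], [2, 4], [3, 1], [4, 5], [1, 6]])
target_ids = np.array([1, 2, 3, 4, 1, 5])

contexts = np.eye(V, dtype=np.int32)[context_ids]   # (6, 2, 7)
target = np.eye(V, dtype=np.int32)[target_ids]      # (6, 7)

W_in = 0.01 * rng.standard_normal((V, H))
W_out = 0.01 * rng.standard_normal((H, V))

h0 = contexts[:, 0] @ W_in       # (6, 5): hidden vector for the first context word
h1 = contexts[:, 1] @ W_in       # (6, 5): same weights, second context word
h = 0.5 * (h0 + h1)              # (6, 5): average over the context
score = h @ W_out                # (6, 7): one score per vocabulary word

# Softmax followed by cross-entropy, averaged over the mini-batch
score = score - score.max(axis=1, keepdims=True)
prob = np.exp(score) / np.exp(score).sum(axis=1, keepdims=True)
loss = -np.mean(np.sum(target * np.log(prob + 1e-7), axis=1))

print(contexts.shape, target.shape, h.shape, score.shape)
print(loss)
```

With weights this close to zero the predicted distribution is nearly uniform, so the initial loss should sit close to ln 7.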

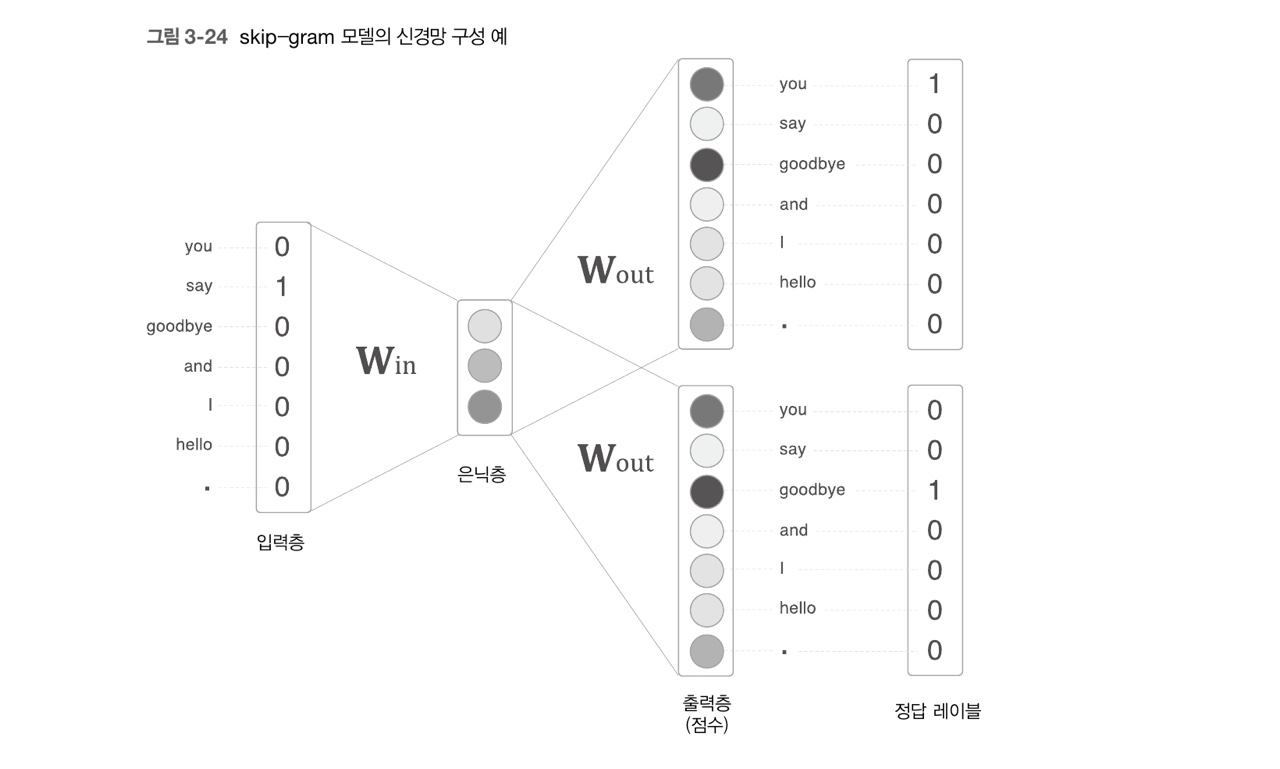

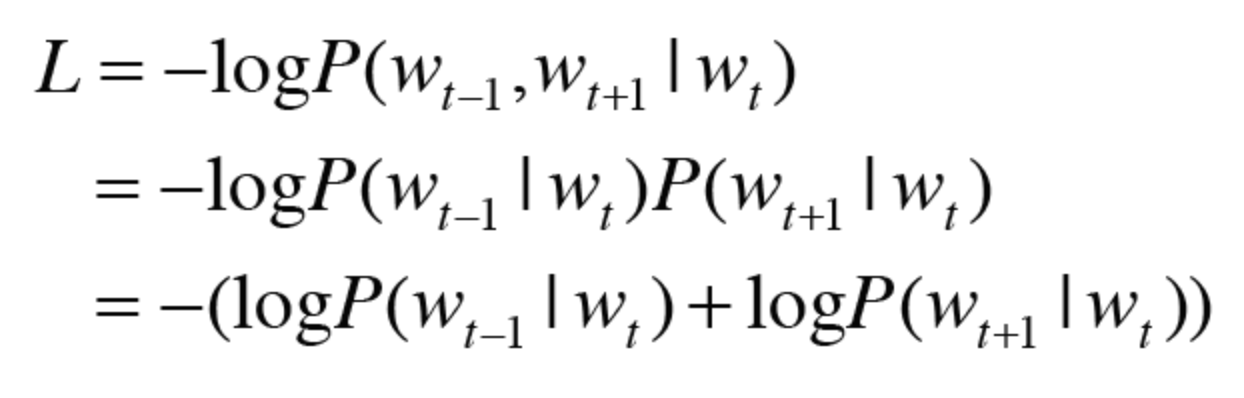

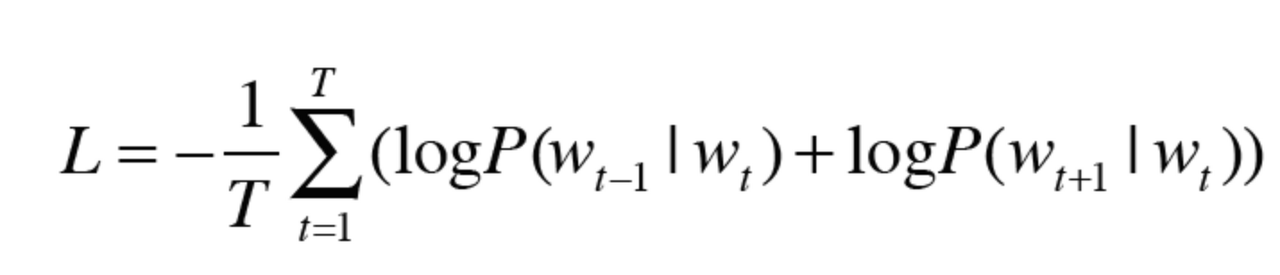

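```python
# Method of SimpleCBOW (continues the class defined above)
def backward(self, dout=1):
    ds = self.loss_layer.backward(dout)
    da = self.out_layer.backward(ds)
    da *= 0.5                        # gradient of the averaging step
    self.in_layer1.backward(da)
    self.in_layer0.backward(da)
    return None
```

skip-gram model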
