0. Abstract

본문

We propose a new framework for estimating generative models via an adversarial process, in which we simultaneously train two models: a generative model G that captures the data distribution, and a discriminative model D that estimates the probability that a sample came from the training data rather than G. The training procedure for G is to maximize the probability of D making a mistake. This framework corresponds to a minimax two-player game. In the space of arbitrary functions G and D, a unique solution exists, with G recovering the training data distribution and D equal to 1 2 everywhere. In the case where G and D are defined by multilayer perceptrons, the entire system can be trained with backpropagation. There is no need for any Markov chains or unrolled approximate inference networks during either training or generation of samples. Experiments demonstrate the potential of the framework through qualitative and quantitative evaluation of the generated samples.

저희는 새로운 generative model을 추정하기 위한 프레임워크를 제안합니다. 이 프레임워크에서는 두 개의 모델을 동시에 학습시킵니다. 하나는 데이터 분포를 잡아내는 generative model G이고, 다른 하나는 훈련 데이터로부터 온 샘플인지 G로부터 온 샘플인지를 확률적으로 추정하는 discriminative model D입니다. G의 학습 과정은 D가 실수를 저지르는 확률을 최대화하는 것입니다. 이 프레임워크는 minimax two-player game에 해당합니다. 임의의 함수 G와 D에 대해서, G가 훈련 데이터 분포를 복원하고 D가 모든 곳에서 1/2의 값을 가지는 유일한 해법이 존재합니다. Multilayer perceptron으로 G와 D를 정의한 경우, 전체 시스템은 backpropagation을 사용하여 학습할 수 있습니다. Markov chain이나 펼쳐진 근사 추론 네트워크는 훈련 또는 샘플 생성 과정에서 필요하지 않습니다. 실험은 생성된 샘플의 질적 및 양적 평가를 통해 이 프레임워크의 잠재력을 보여줍니다.

- 경쟁하는 과정을 통해 generative model을 추정하는 프레임 워크를 제안함

- 2개의 모델을 학습시킴 (G vs D)

- Generative model (생성 모델) G

- training data의 분포를 모사함 (fake data 생성)

- discriminative model이 구별하지 못하도록 함 (진짜와 가짜를 판별하는 모델)

- Discriminative model (판별 모델) D

- G가 만든 (fake data)가 아닌, 실제 training data로부터 나온 데이터일 확률을 추정함

※ G의 학습 과정은 판별모델(D)가 G로부터 나온(fake data) vs training (real data) 를 판별하는데 실수할 확률을 최대화 시키는 것.

즉, 다시 말해 G는 D가 실제데이터와 fake data를 서로 구별하지 못하도록 속이는 것이 G의 목표

⇒ 논문에서는 이를 minimax two-layer game이라고 표현함

⇒ G, D의 공간에서 G가 training 데이터 분포를 모사하게 되면서, D가 실제 training 데이터인지, fake data인지 판별하는 확률은 1/2가 될 것임

⇒ 즉, 두 데이터를 판별하는 것이 어려워진다는 말

- G와 D가 multi-layer perceptrons으로 정의된 경우, 전체 시스템은 back-propagation을 통해 학습됨

1. Intro/Related work

본문

The promise of deep learning is to discover rich, hierarchical models [2] that represent probability distributions over the kinds of data encountered in artificial intelligence applications, such as natural images, audio waveforms containing speech, and symbols in natural language corpora. So far, the most striking successes in deep learning have involved discriminative models, usually those that map a high-dimensional, rich sensory input to a class label [14, 22]. These striking successes have primarily been based on the backpropagation and dropout algorithms, using piecewise linear units [19, 9, 10] which have a particularly well-behaved gradient . Deep generative models have had less of an impact, due to the difficulty of approximating many intractable probabilistic computations that arise in maximum likelihood estimation and related strategies, and due to difficulty of leveraging the benefits of piecewise linear units in the generative context. We propose a new generative model estimation procedure that sidesteps these difficulties. 1

심층 학습의 잠재력은 인공지능 응용 프로그램에서 만나게 되는 자연 이미지, 음성을 포함한 오디오 웨이브폼, 자연 언어 말뭉치의 기호 등과 같은 데이터 종류에 대한 확률 분포를 표현하는 풍부하고 계층적인 모델을 발견하는 것입니다. 지금까지 심층 학습의 가장 두드러진 성공은 주로 고차원의 풍부한 감각적 입력을 클래스 레이블에 매핑하는 판별 모델에 관한 것이었습니다. 이러한 두드러진 성공은 주로 역전파와 드롭아웃 알고리즘을 기반으로 하였으며, 특히 잘 동작하는 기울기를 가진 조각별 선형 유닛을 사용했습니다. 심층 생성 모델은 다양한 악명높은 확률 계산의 근사화와 최대 우도 추정 및 관련 전략에서 발생하는 어려움, 그리고 생성적인 맥락에서 조각별 선형 유닛의 이점을 활용하기 어려운 점으로 인해 그 영향력이 적었습니다. 저희는 이러한 어려움을 우회하는 새로운 생성 모델 추정 절차를 제안합니다.

In the proposed adversarial nets framework, the generative model is pitted against an adversary: a discriminative model that learns to determine whether a sample is from the model distribution or the data distribution. The generative model can be thought of as analogous to a team of counterfeiters, trying to produce fake currency and use it without detection, while the discriminative model is analogous to the police, trying to detect the counterfeit currency. Competition in this game drives both teams to improve their methods until the counterfeits are indistiguishable from the genuine articles. 제안된 적대적 신경망(adversarial nets) 프레임워크에서, 생성 모델은 적대적인 상대인 판별 모델과 맞붙게 됩니다. 판별 모델은 샘플이 모델 분포에서 나왔는지 아니면 데이터 분포에서 나왔는지를 판단하는 방법을 학습합니다. 생성 모델은 가짜 통화를 생산하고 감지되지 않고 사용하려는 위조꾼 팀에 유사하게 생각할 수 있으며, 판별 모델은 위조된 통화를 감지하려는 경찰에 유사하게 생각할 수 있습니다. 이 게임에서의 경쟁은 위조품이 진짜 물건과 구별할 수 없는 정도까지 양 팀이 방법을 개선하도록 동력을 부여합니다.

This framework can yield specific training algorithms for many kinds of model and optimization algorithm. In this article, we explore the special case when the generative model generates samples by passing random noise through a multilayer perceptron, and the discriminative model is also a multilayer perceptron. We refer to this special case as adversarial nets. In this case, we can train both models using only the highly successful backpropagation and dropout algorithms [17] and sample from the generative model using only forward propagation. No approximate inference or Markov chains are necessary. 이 프레임워크는 많은 종류의 모델과 최적화 알고리즘에 대한 구체적인 학습 알고리즘을 도출할 수 있습니다. 이 논문에서는 생성 모델이 랜덤 노이즈를 다층 퍼셉트론을 통과시켜 샘플을 생성하고, 판별 모델도 다층 퍼셉트론인 특수한 경우를 탐구합니다. 이 특수한 경우를 적대적 신경망(adversarial nets)이라고 합니다. 이 경우, 우리는 두 모델을 매우 성공적인 역전파(backpropagation)와 드롭아웃(dropout) 알고리즘만을 사용하여 학습할 수 있으며, 생성 모델에서는 단순히 순방향 전파만을 사용하여 샘플을 추출할 수 있습니다. 근사 추론(approximate inference)이나 마르코프 체인(Markov chains)은 필요하지 않습니다.

- adversarial nets 프레임워크에서 generator 모델은 discriminator 모델을 속이도록 세팅되고 discriminator 모델은 샘플이 generator 모델 G가 모델링한 분포에서 나온 것인지 실제 데이터 분포에서 나온것인지 결정하는 법을 학습시킴

- 이러한 경쟁구도는 두 모델이 각각의 목적을 달성시키기 위해 스스로를 개선하도록 함

- ex) 위조지폐범(G)는 경찰(D)을 속이기 위해 위조지폐를 만들고 경찰은 이것을 진짜 지폐는 1, 가짜 지폐는 0으로 판단하여 구분함.

- 위조지폐범도 훈련을 할수록 위조 능력이 높아지고 경찰도 훈련을 할수록 위조지폐의 감별능력이 높아지게 됨 → 모두의 성능을 향상시킴

- G 모델과 D 모델 둘 다 다층 퍼셉트론으로 구성됨 → 복잡한 네트워크 필요없이 순전파 및 역전파 드롭아웃으로 학습이 가능함→ 적대적(adversarial) net이라고 부름

⇒ 결국 GAN의 핵심 컨셉은 각각의 역할을 가진 두 모델을 통해 적대적 학습을 하면서 ‘진짜 같은 가짜’를 생성해내는 능력을 키워주는 것

2. Adversarial nets

본문

An alternative to directed graphical models with latent variables are undirected graphical models with latent variables, such as restricted Boltzmann machines (RBMs) [27, 16], deep Boltzmann machines (DBMs) [26] and their numerous variants. The interactions within such models are represented as the product of unnormalized potential functions, normalized by a global summation/integration over all states of the random variables. This quantity (the partition function) and its gradient are intractable for all but the most trivial instances, although they can be estimated by Markov chain Monte Carlo (MCMC) methods. Mixing poses a significant problem for learning algorithms that rely on MCMC [3, 5]. Deep belief networks (DBNs) [16] are hybrid models containing a single undirected layer and several directed layers. While a fast approximate layer-wise training criterion exists, DBNs incur the computational difficulties associated with both undirected and directed models. 잠재 변수를 포함하는 방향이 있는 그래피컬 모델의 대안으로는 제한된 볼츠만 머신(RBM) [27, 16], 딥 볼츠만 머신(DBM) [26] 및 그 여러 변형과 같은 잠재 변수를 포함하는 무방향 그래피컬 모델이 있습니다. 이러한 모델 내의 상호작용은 확률 변수의 모든 상태에 대한 전역 합산/적분으로 정규화된 비정규화된 포텐셜 함수의 곱으로 표현됩니다. 이런 양(분할 함수)과 그 경사는 가장 단순한 경우를 제외하고는 계산하기 어려우며, 마르코프 체인 몬테카를로(MCMC) 방법을 사용하여 추정할 수 있습니다. MCMC에 의존하는 학습 알고리즘에서는 혼합(mixing)이 중요한 문제가 됩니다 [3, 5]. 심층 신뢰 네트워크(DBN) [16]은 하나의 무방향 레이어와 여러 개의 방향 레이어로 구성된 하이브리드 모델입니다. 빠른 근사적인 레이어별 학습 기준이 존재하지만, DBN은 무방향 모델과 방향 모델 모두에 관련된 계산적인 어려움을 겪습니다. Alternative criteria that do not approximate or bound the log-likelihood have also been proposed, such as score matching [18] and noise-contrastive estimation (NCE) [13]. Both of these require the learned probability density to be analytically specified up to a normalization constant. Note that in many interesting generative models with several layers of latent variables (such as DBNs and DBMs), it is not even possible to derive a tractable unnormalized probability density. Some models such as denoising auto-encoders [30] and contractive autoencoders have learning rules very similar to score matching applied to RBMs. In NCE, as in this work, a discriminative training criterion is employed to fit a generative model. However, rather than fitting a separate discriminative model, the generative model itself is used to discriminate generated data from samples a fixed noise distribution. Because NCE uses a fixed noise distribution, learning slows dramatically after the model has learned even an approximately correct distribution over a small subset of the observed variables. 로그 우도를 근사하거나 제한하는 대신에 다른 대안적인 기준들이 제안되었습니다. 예를 들면, score matching [18] 및 noise-contrastive estimation (NCE) [13]가 있습니다. 이 두 가지 방법은 학습된 확률 밀도를 정규화 상수까지 해석적으로 명시해야 한다는 점이 공통점입니다. 주목할만한 몇 개의 잠재 변수 층을 가진 흥미로운 생성 모델(예: DBN 및 DBM)에서는 심지어 계산 가능한 비정규화된 확률 밀도를 유도하는 것조차 어려운 경우도 있습니다. 노이즈 제거 오토인코더 [30] 및 컨트랙티브 오토인코더와 같은 몇몇 모델들은 RBM에 적용된 score matching과 매우 유사한 학습 규칙을 가지고 있습니다. NCE에서는 이 작업과 같이 생성 모델을 피팅하기 위해 식별적인 학습 기준이 사용됩니다. 그러나 별도의 식별 모델을 피팅하는 대신에 생성 모델 자체가 고정된 노이즈 분포로부터 생성된 데이터와 샘플들을 구별하는 데 사용됩니다. NCE는 고정된 노이즈 분포를 사용하기 때문에, 모델이 일부 관측 변수에 대해 실질적으로 올바른 분포를 학습한 후에 학습 속도가 크게 느려집니다.

Finally, some techniques do not involve defining a probability distribution explicitly, but rather train a generative machine to draw samples from the desired distribution. This approach has the advantage that such machines can be designed to be trained by back-propagation. Prominent recent work in this area includes the generative stochastic network (GSN) framework [5], which extends generalized denoising auto-encoders [4]: both can be seen as defining a parameterized Markov chain, i.e., one learns the parameters of a machine that performs one step of a generative Markov chain. Compared to GSNs, the adversarial nets framework does not require a Markov chain for sampling. Because adversarial nets do not require feedback loops during generation, they are better able to leverage piecewise linear units [19, 9, 10], which improve the performance of backpropagation but have problems with unbounded activation when used ina feedback loop. More recent examples of training a generative machine by back-propagating into it include recent work on auto-encoding variational Bayes [20] and stochastic backpropagation [24]. 마지막으로, 몇 가지 기법은 확률 분포를 명시적으로 정의하는 대신 원하는 분포에서 샘플을 생성하기 위해 생성 모델을 학습합니다. 이 접근 방식은 이러한 기계가 역전파로 학습될 수 있도록 설계될 수 있다는 장점이 있습니다. 이 분야에서 주목할만한 최근 연구로는 생성적 확률 신경망 (GSN) 프레임워크 [5]가 있으며, 이는 일반화된 노이즈 제거 오토인코더 [4]를 확장한 것으로 볼 수 있습니다. 이 둘은 매개변수화된 마르코프 체인을 정의하는 것으로 볼 수 있으며, 즉, 생성적인 마르코프 체인의 한 단계를 수행하는 기계의 매개변수를 학습합니다. GSN과 비교하여, 적대적 네트워크 프레임워크는 샘플링을 위해 마르코프 체인을 필요로하지 않습니다. 적대적 네트워크는 생성 중에 피드백 루프가 필요하지 않기 때문에, 역전파의 성능을 향상시키는데 도움이 되는 분리 선형 유닛 [19, 9, 10]을 더 잘 활용할 수 있습니다. 생성 기계를 역전파로 학습하는 더 최근의 예로는 오토인코딩 변이 베이즈 [20] 및 확률적 역전파 [24]에 대한 최근 연구가 있습니다.

- 학습 초반에는 G가 생성해내는 이미지는 D가 G가 생성해낸 가짜 샘플인지 실제 데이터의 샘플인지 바로 구별할 수 있을 만큼 형편없기 때문에 D(G(z))의 결과가 0에 가까움.

- 그리고 학습이 진행될수록, G는 실제 데이터의 분포를 모사하면서 D(G(z))의 값이 1이 되도록 발전함

- G는 오른쪽 식을 최소화하는 것이 목적, D는 최대화 하는 것이 목적

2.1 도둑의 관점

- G(z) : 가짜 이미지

- D(G(z)): 가짜 이미지가 들어왔을 때 D가 어떻게 판별할까?

→ 도둑의 경우, 가짜 이미지가 들어왔을 때, 진짜 이미지라고 판단하는 것이 목적임

→ 따라서 D(G(z))가 1이 되는 것이 최종 목표

→ 그렇게 되면 log(1-1)이 됨. 즉, 오른쪽 식이 최소화되는 것이라고 할 수 있음

- D(G(z))가 1이 되는 것이 좋은거

2.2 경찰의 관점

- logD(x)가 1이 되는 것을 목표로 함 → 진짜 데이터가 들어왔을 때 진짜라고 판별해야 하기 때문

- D(G(z)) : 가짜를 최대한 가짜로 구분해야 하기 때문에 0으로 만드는 것이 경찰의 목적. 즉 log(1-0) =0 에 가깝도록 만드는 것이 최종 목표

- D(G(z))가 0이 되는 것이 좋은

- 즉, 최댓값은 0, 최솟값은 마이너스 무한대

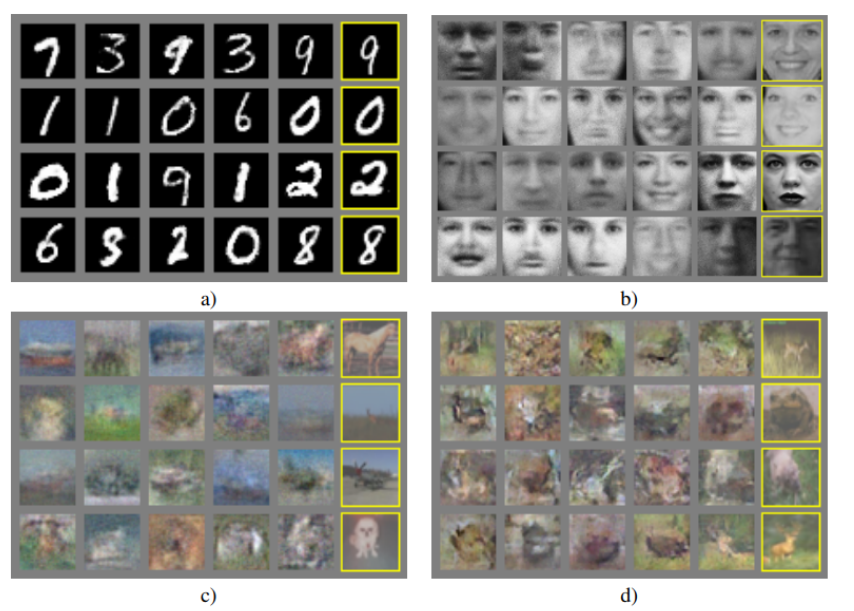

- 파란색 점선: discriminative distribution

- 검은색 점선: data generating distribution(real)

- 녹색 실선: generative distribution(fake)

- z(noise): x(이미지) 공간으로 맵핑이 원래 이미지의 분포와 다른 분포를 생성(fake data)ex) 숫자 데이터 2라고 하게 되면 초반에는 뭉개진 숫자로 나온다는

- (a): 학습초기에는 real과 fake의 분포가 전혀 다름. D의 성능도 썩 좋지 않음

- (b): 학습시킬 때, G 또는 D 중 하나를 고정시켜서 나머지를 학습시킴 (이 때 여기에서는 G를 고정. 이후 D를 학습시킨 것임)D가 (a)처럼 들쑥날쑥하게 확률을 판단하지 않고, real과 fake를 분명하게 판별해 내고 있음을 확인할 수 있. 이는 D가 성능이 올라간 것이라고 말할 수 있음

- (c): 이번에는 D를 고정하고 G를 학습시킴어느정도 D가 학습이 이루어지면, G는 실제 데이터의 분포를 모사하며 D가 구별하기 힘든 방향으로 학습을 함

- (d): 이 과정의 반복의 결과로 real과 fake의 분포가 거의 비슷해져 구분할 수 없을 만큼 G가 학습을 하게 되고 결국, D가 이 둘을 구분할 수 없게 되어 확률을 1/2로 계산하게 됨

3. 이론/증명

3-1) Global Optimality of pg(G출력분포) = pdata(실제data)

- (최적화 구하는 법)

- 어떠한 G가 들어오던지 간에, 최적의 D는 뭘까? ⇒ 위의 식을 가질 때 가장 최적이다! 라는 것을 증명함

- D 입장에서는 V(G,D)를 최대화, G입장에서는 최소

- V(G,D)를 최소화 시키는 최적해가 무엇일까

- D*G(x)를 한 이유는 앞서 D에 대해 한번 maximize를 했기 때문

3-2) Convergence of Algorithm 1

- 학습 구현 방법

- 학습 반복 횟수만큼 반복(에폭)

- 매 에폭당 k번 D 학습한 이후에, G 학습

- D의 학습: m개의 노이즈를 뽑고, m개의 원본 데이터 샘플링 한 후, 경사하강법을 통해 maximize 진행 → 원본데이터 D(x)에 대해서는 1, D(G(z))에 대해서는 0을 출력하도록 학습

- G의 학습: m개의 노이즈를 뽑고 m개의 fake data를 만들고 기울기 값을 낮추는 식으로 학습

4. Experiments

5. 장단점

본문

This new framework comes with advantages and disadvantages relative to previous modeling frameworks. The disadvantages are primarily that there is no explicit representation of pg(x), and that D must be synchronized well with G during training (in particular, G must not be trained too much without updating D, in order to avoid “the Helvetica scenario” in which G collapses too many values of z to the same value of x to have enough diversity to model pdata), much as the negative chains of a Boltzmann machine must be kept up to date between learning steps. The advantages are that Markov chains are never needed, only backprop is used to obtain gradients, no inference is needed during learning, and a wide variety of functions can be incorporated into the model. Table 2 summarizes the comparison of generative adversarial nets with other generative modeling approaches. The aforementioned advantages are primarily computational. Adversarial models may also gain some statistical advantage from the generator network not being updated directly with data examples, but only with gradients flowing through the discriminator. This means that components of the input are not copied directly into the generator’s parameters. Another advantage of adversarial networks is that they can represent very sharp, even degenerate distributions, while methods based on Markov chains require that the distribution be somewhat blurry in order for the chains to be able to mix between modes.

이 새로운 프레임워크는 이전 모델링 프레임워크에 비해 장단점을 가지고 있습니다. 주요한 단점은 명시적으로 pg(x)를 표현하지 않으며, 훈련 중에 D가 G와 잘 동기화되어야 한다는 점입니다. 특히, G를 업데이트하지 않고 너무 많이 훈련하면 G가 충분한 다양성을 가지기 위해 z의 많은 값을 x의 동일한 값으로 축소하는 "Helvetica 시나리오"가 발생할 수 있습니다. 마찬가지로, 볼츠만 머신의 부정적인 체인은 학습 단계 사이에서 최신 상태를 유지해야 합니다. 이러한 단점과는 대조적으로 이 모델의 장점은 마르코프 체인이 필요하지 않으며, 기울기를 얻기 위해 역전파만 사용된다는 것입니다. 학습 중에는 추론이 필요하지 않으며, 다양한 함수를 모델에 통합할 수 있습니다. 표 2는 생성적 적대 신경망과 다른 생성 모델링 접근 방식을 비교한 것을 요약한 것입니다.

언급된 장점들은 주로 계산적인 측면에서입니다. 적대적 모델은 생성자 네트워크가 데이터 예제와 직접적으로 업데이트되지 않고, 판별자를 통해 그래디언트만이 흐른다는 점에서 통계적인 이점을 얻을 수도 있습니다. 이는 입력 구성 요소가 생성자의 매개 변수로 직접 복사되지 않는다는 것을 의미합니다. 적대적 네트워크의 또 다른 장점은 마르코프 체인에 기반한 방법들은 체인이 모드 간에 혼합할 수 있도록 분포가 다소 흐릿해야 한다는 점을 필요로 하지만, 적대적 네트워크는 매우 날카로운, 심지어 퇴화된 분포를 표현할 수 있다는 것입니다.

- 단점

- D와 G가 균형을 잘 맞춰 성능이 향상되어야 함 (G는 D가 너무 발전하기 전에 너무 발전되어서는 안됨. G가 z 데이터를 너무 많이 붕괴시켜버리기 때문)

- 장점

- Markov chains이 전혀 필요 없고 gradients를 얻기 위해 back-propagation만이 사용됨

- Markov chains를 기반으로 하는 방법보다 선명한 이미지를 얻을 수 있음

- 학습 중 어떠한 inference가 필요 없음

- 다양한 함수들이 모델이 접목될 수 있음

※ 마코브 체인

- 마코브 체인은 현재 상태가 이전 상태에만 의존하는 특성을 가지며, 이전 상태를 기반으로 다음 상태를 예측하는 확률 모델

'Deep Learning > [논문] Paper Review' 카테고리의 다른 글

| cGAN/Pix2Pix (0) | 2023.07.07 |

|---|---|

| R-CNN (0) | 2023.07.06 |

| AE (0) | 2023.07.06 |

| SPPNet (0) | 2023.07.06 |

| Faster R-CNN (0) | 2023.07.06 |