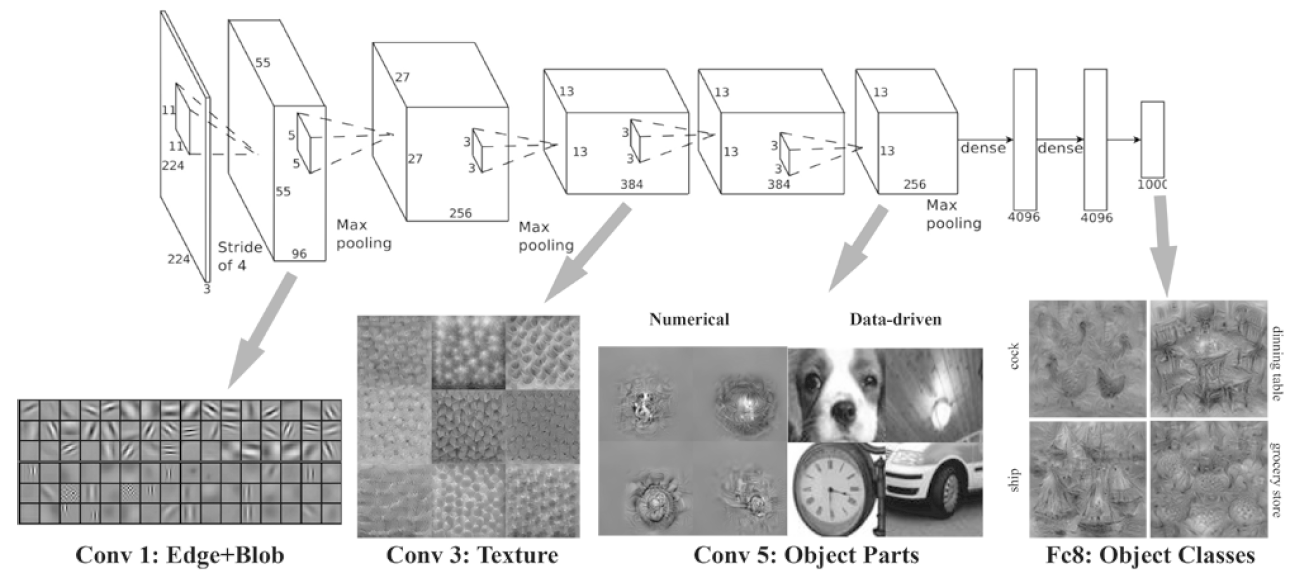

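- CNN (convolutional neural network)

- Used in many areas, including image recognition and speech recognition.

7-1) Overall structure

7-2) Convolutional layer

- Unlike a fully connected layer, the data flowing through it is three-dimensional

7-2-1) Problems with the fully connected layer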

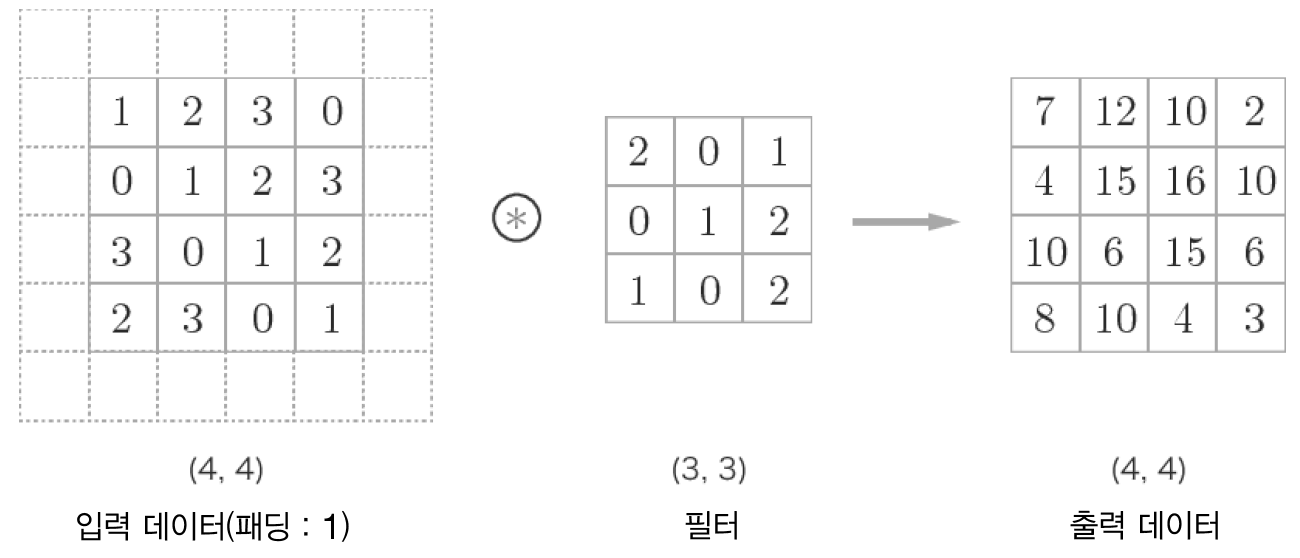

7-2-2) Convolution operation
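As a concrete instance of the operation, here is a minimal sketch of a single-channel convolution (strictly a cross-correlation, as is usual in deep learning) with stride 1 and no padding. The function name `conv2d_naive` and the sample values are illustrative assumptions, not code from the book's repository.

```python
import numpy as np

def conv2d_naive(x, w):
    """Slide filter w over 2D input x (stride 1, no padding)."""
    H, W = x.shape
    FH, FW = w.shape
    out = np.zeros((H - FH + 1, W - FW + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # Multiply the window element-wise by the filter and sum
            out[i, j] = np.sum(x[i:i + FH, j:j + FW] * w)
    return out

x = np.array([[1, 2, 3, 0],
              [0, 1, 2, 3],
              [3, 0, 1, 2],
              [2, 3, 0, 1]])
w = np.array([[2, 0, 1],
              [0, 1, 2],
              [1, 0, 2]])
result = conv2d_naive(x, w)
print(result)  # a 2x2 output: [[15, 16], [6, 15]]
```

A 4×4 input and a 3×3 filter give a 2×2 output because the filter fits in only two positions along each axis.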

7-2-3) Padding

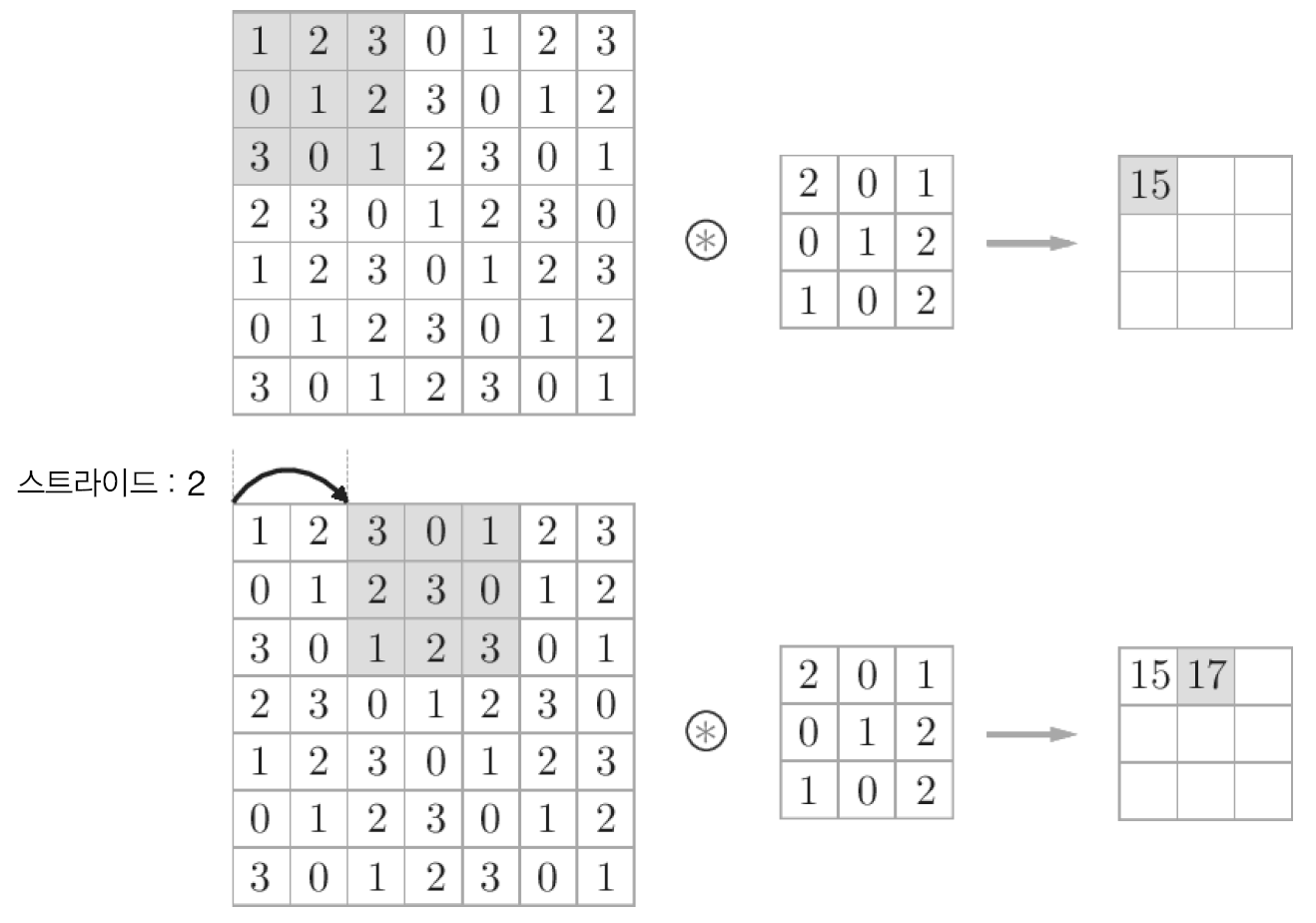

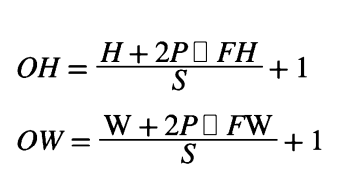

7-2-4) Stride

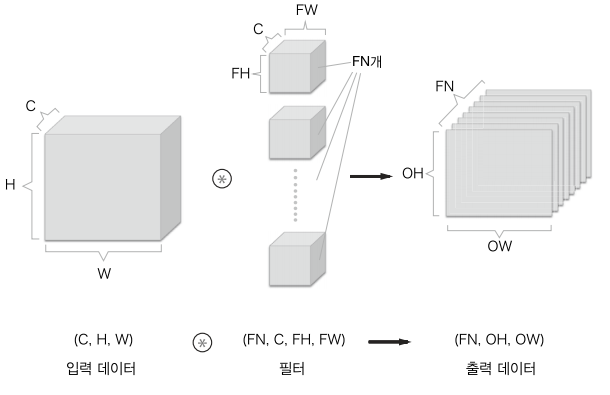
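Padding and stride together determine the output size. The sketch below uses the same formula that the `Convolution` class later in these notes applies in `forward`; the helper name `conv_output_size` is a hypothetical one chosen for illustration, and it assumes the stride divides evenly.

```python
def conv_output_size(input_size, filter_size, stride=1, pad=0):
    """Output height (or width) of a convolution along one axis."""
    return (input_size + 2 * pad - filter_size) // stride + 1

# Padding 1 keeps a 4-wide input at 4 when the filter is 3 wide
print(conv_output_size(4, 3, stride=1, pad=1))   # 4
# Stride 2 shrinks a 7-wide input to 3 with a 3-wide filter
print(conv_output_size(7, 3, stride=2, pad=0))   # 3
# MNIST-sized input with a 5-wide filter
print(conv_output_size(28, 5))                   # 24
```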

7-2-5) Convolution on 3D data

7-2-6) Thinking in blocks

7-3) Pooling layer

7-4) Implementing the convolution/pooling layers

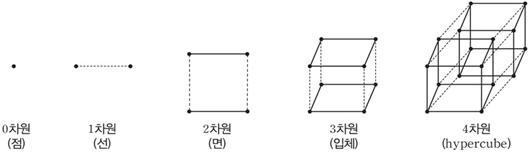

7-4-1) 4D arrays

- If the data has shape (10, 1, 28, 28) ⇒ 10 samples, 1 channel, 28×28 pixels each

- x[0].shape ⇒ (1, 28, 28) ⇒ accesses the first sample

- ⇒ a trick called im2col makes the problem simpler.
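The indexing above can be checked directly in NumPy. This is a small sketch with random data; the variable name `x` follows the notes.

```python
import numpy as np

# 10 samples, 1 channel, 28x28 pixels
x = np.random.rand(10, 1, 28, 28)

print(x.shape)        # (10, 1, 28, 28)
print(x[0].shape)     # (1, 28, 28): the first sample
print(x[0, 0].shape)  # (28, 28): the first channel of the first sample
```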

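7-4-2) im2col (image to column): expanding the data

- Unrolls each filter-sized region of the image and lays the regions out side by side in a 2D matrix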
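To make the expansion concrete, here is a minimal sketch of how such a function can be written. It is named `im2col_sketch` to make clear it is an illustrative assumption and not necessarily identical to `common.util.im2col`; it places each filter-sized region in one row.

```python
import numpy as np

def im2col_sketch(x, filter_h, filter_w, stride=1, pad=0):
    """Expand (N, C, H, W) input into a 2D matrix, one row per filter position."""
    N, C, H, W = x.shape
    out_h = (H + 2 * pad - filter_h) // stride + 1
    out_w = (W + 2 * pad - filter_w) // stride + 1

    # Zero-pad only the height and width axes
    img = np.pad(x, [(0, 0), (0, 0), (pad, pad), (pad, pad)], mode='constant')
    col = np.zeros((N, C, filter_h, filter_w, out_h, out_w))

    # For each offset inside the filter, copy the strided slice of the image
    for fy in range(filter_h):
        y_max = fy + stride * out_h
        for fx in range(filter_w):
            x_max = fx + stride * out_w
            col[:, :, fy, fx, :, :] = img[:, :, fy:y_max:stride, fx:x_max:stride]

    # Rows: (sample, out_y, out_x); columns: (channel, filter_y, filter_x)
    return col.transpose(0, 4, 5, 1, 2, 3).reshape(N * out_h * out_w, -1)

x1 = np.random.rand(1, 3, 7, 7)
col1 = im2col_sketch(x1, 5, 5)
print(col1.shape)  # (9, 75)
```

The first row of `col1` is simply the top-left 5×5 window of all three channels, flattened.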

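7-4-3) Implementing the convolution layer

```python
# Implementation using im2col
import sys, os
sys.path.append('/deep-learning-from-scratch')
import numpy as np
from common.util import im2col

x1 = np.random.rand(1, 3, 7, 7)  # number of samples, channels, height, width
col1 = im2col(x1, 5, 5, stride=1, pad=0)
print(col1.shape)  # (9, 75)

x2 = np.random.rand(10, 3, 7, 7)  # 10 samples
col2 = im2col(x2, 5, 5, stride=1, pad=0)
print(col2.shape)  # (90, 75)
```

- In both cases the second dimension has 75 elements

- This equals the number of elements in one filter (3 channels × 5×5)

- With a batch size of 1 the result is (9, 75); with a batch size of 10 it has ten times as many rows, (90, 75)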

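```python
# Convolution layer implementation: the Convolution class
# (assumes numpy as np and im2col are imported as above)
class Convolution:
    def __init__(self, W, b, stride=1, pad=0):
        self.W = W
        self.b = b
        self.stride = stride
        self.pad = pad

    def forward(self, x):
        FN, C, FH, FW = self.W.shape
        N, C, H, W = x.shape
        out_h = int(1 + (H + 2*self.pad - FH) / self.stride)
        out_w = int(1 + (W + 2*self.pad - FW) / self.stride)

        col = im2col(x, FH, FW, self.stride, self.pad)  # expand the input data
        col_W = self.W.reshape(FN, -1).T  # expand the filters
        out = np.dot(col, col_W) + self.b

        out = out.reshape(N, out_h, out_w, -1).transpose(0, 3, 1, 2)

        return out
```

- Passing -1 as the second argument to reshape lets NumPy infer that dimension so the total number of elements is unchanged; for example, a (10, 3, 5, 5) array reshaped with reshape(10, -1) becomes (10, 75)

- The transpose function rearranges the output data into the appropriate shape

- It reorders the axes according to the indices given

- In backpropagation, the col2im function is used in place of im2col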
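The two shape manipulations in `forward` can be tried in isolation. This is a sketch with random arrays; the sizes (10 filters, 3 channels, 5×5 filter, 3×3 output) are assumptions chosen to match the im2col example above.

```python
import numpy as np

FN, C, FH, FW = 10, 3, 5, 5
W = np.random.rand(FN, C, FH, FW)

# reshape(FN, -1): NumPy infers the second dimension as 3*5*5 = 75
col_W = W.reshape(FN, -1).T
print(col_W.shape)  # (75, 10)

# Pretend this is the result of np.dot(col, col_W) for one 7x7 sample
N, out_h, out_w = 1, 3, 3
out = np.random.rand(N * out_h * out_w, FN)

# (9, 10) -> (N, out_h, out_w, FN) -> (N, FN, out_h, out_w)
out4d = out.reshape(N, out_h, out_w, -1).transpose(0, 3, 1, 2)
print(out4d.shape)  # (1, 10, 3, 3)
```

The transpose moves the filter axis from last place to second place, which restores the (N, C, H, W) layout the next layer expects.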

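7-4-4) Implementing the pooling layer

- Unlike the convolution layer, pooling treats each channel independently

- In a convolution layer, the products of a filter and the data are summed across all channels into a single output value, whereas in pooling each channel is expanded separately and the expanded windows are stacked as rows

- After expansion, take the maximum of each row and reshape the result into the appropriate shape

```python
class Pooling:
    def __init__(self, pool_h, pool_w, stride=1, pad=0):
        self.pool_h = pool_h
        self.pool_w = pool_w
        self.stride = stride
        self.pad = pad

    def forward(self, x):
        n, c, h, w = x.shape
        out_h = int(1 + (h - self.pool_h) / self.stride)
        out_w = int(1 + (w - self.pool_w) / self.stride)

        # (1) Expand the input data
        col = im2col(x, self.pool_h, self.pool_w, self.stride, self.pad)
        col = col.reshape(-1, self.pool_h*self.pool_w)

        # (2) Maximum of each row (axis=1 takes the max along each row)
        out = np.max(col, axis=1)

        # (3) Reshape into the appropriate output shape
        out = out.reshape(n, out_h, out_w, c).transpose(0, 3, 1, 2)

        return out
```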
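For a quick sanity check without im2col, non-overlapping 2×2 max pooling with stride 2 can also be computed with a plain reshape. This is a different technique from the class above, shown only as a sketch to illustrate what the result should look like; the function name `max_pool_2x2` is hypothetical, and it assumes the height and width are even.

```python
import numpy as np

def max_pool_2x2(x):
    """2x2 max pooling with stride 2 on (N, C, H, W) input, H and W even."""
    N, C, H, W = x.shape
    # Split each spatial axis into (blocks, 2) and take the max within blocks
    blocks = x.reshape(N, C, H // 2, 2, W // 2, 2)
    return blocks.max(axis=(3, 5))

x = np.arange(16).reshape(1, 1, 4, 4)
pooled = max_pool_2x2(x)
print(pooled[0, 0])  # [[ 5  7], [13 15]]
```

Each channel is pooled on its own, so the number of channels in the output equals the number in the input.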

7-5) Implementing a CNN

- The CNN's layers run in the order Convolution-ReLU-Pooling-Affine-ReLU-Affine-Softmax

- Setting the hyperparameters

```python
class SimpleConvNet:
    def __init__(self, input_dim=(1, 28, 28),
                 conv_param={'filter_num':30, 'filter_size':5, 'pad':0, 'stride':1},
                 hidden_size=100, output_size=10, weight_init_std=0.01):
        filter_num = conv_param['filter_num']
        filter_size = conv_param['filter_size']
        filter_pad = conv_param['pad']
        filter_stride = conv_param['stride']
        input_size = input_dim[1]
        conv_output_size = (input_size - filter_size + 2*filter_pad) / filter_stride + 1
        pool_output_size = int(filter_num * (conv_output_size/2) * (conv_output_size/2))
```

Parameters (as listed in the class docstring; note that the constructor shown above takes input_dim, conv_param, and hidden_size rather than input_size, hidden_size_list, and activation)

- input_size : input size (784 for MNIST)
- hidden_size_list : list with the number of neurons in each hidden layer (e.g. [100, 100, 100])
- output_size : output size (10 for MNIST)
- activation : activation function, 'relu' or 'sigmoid'
- weight_init_std : standard deviation of the weights (e.g. 0.01); 'relu' or 'he' selects the He initial values, and 'sigmoid' or 'xavier' selects the Xavier initial values

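- pool_output_size = int(filter_num * (conv_output_size/2) * (conv_output_size/2)): the 2×2 pooling with stride 2 used in this network halves both the height and the width of the convolution output, so the flattened input to Affine1 has filter_num × (conv_output_size/2)² elements. With the defaults, conv_output_size = (28 - 5 + 0)/1 + 1 = 24, which gives 30 × 12 × 12 = 4320.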
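The same arithmetic can be run step by step. This sketch just plugs the default hyperparameters from the constructor into the two formulas; nothing here comes from outside the code shown above.

```python
# Default hyperparameters from SimpleConvNet.__init__
input_size = 28
filter_num, filter_size, filter_pad, filter_stride = 30, 5, 0, 1

# Size of the convolution output along one axis
conv_output_size = (input_size - filter_size + 2 * filter_pad) / filter_stride + 1
print(conv_output_size)  # 24.0

# 2x2 pooling with stride 2 halves height and width; flatten over all filters
pool_output_size = int(filter_num * (conv_output_size / 2) * (conv_output_size / 2))
print(pool_output_size)  # 4320
```

This is why W2 has shape (4320, hidden_size) with the default settings.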

- Weight initialization

```python
        # Weight initialization
        self.params = {}
        self.params['W1'] = weight_init_std * \
                            np.random.randn(filter_num, input_dim[0], filter_size, filter_size)
        self.params['b1'] = np.zeros(filter_num)
        self.params['W2'] = weight_init_std * \
                            np.random.randn(pool_output_size, hidden_size)
        self.params['b2'] = np.zeros(hidden_size)
        self.params['W3'] = weight_init_std * \
                            np.random.randn(hidden_size, output_size)
        self.params['b3'] = np.zeros(output_size)
```

- Layer creation

```python
        # Create the layers
        self.layers = OrderedDict()
        self.layers['Conv1'] = Convolution(self.params['W1'], self.params['b1'],
                                           conv_param['stride'], conv_param['pad'])
        self.layers['Relu1'] = Relu()
        self.layers['Pool1'] = Pooling(pool_h=2, pool_w=2, stride=2)
        self.layers['Affine1'] = Affine(self.params['W2'], self.params['b2'])
        self.layers['Relu2'] = Relu()
        self.layers['Affine2'] = Affine(self.params['W3'], self.params['b3'])

        self.last_layer = SoftmaxWithLoss()
```

- Once initialization is done, the predict method (which performs inference) and the loss method (which computes the value of the loss function) can be implemented.

```python
    def predict(self, x):
        for layer in self.layers.values():
            x = layer.forward(x)

        return x

    def loss(self, x, t):
        """Compute the loss function.

        Parameters
        ----------
        x : input data
        t : ground-truth labels
        """
        y = self.predict(x)
        return self.last_layer.forward(y, t)
```

- Everything up to here is the forward-propagation code

- Implementing the gradient computation by backpropagation

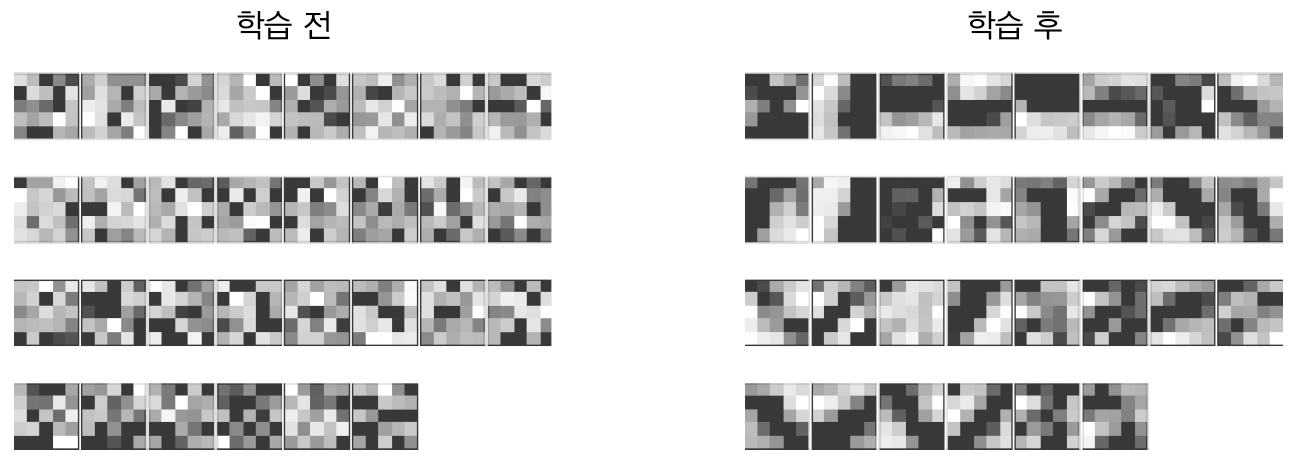

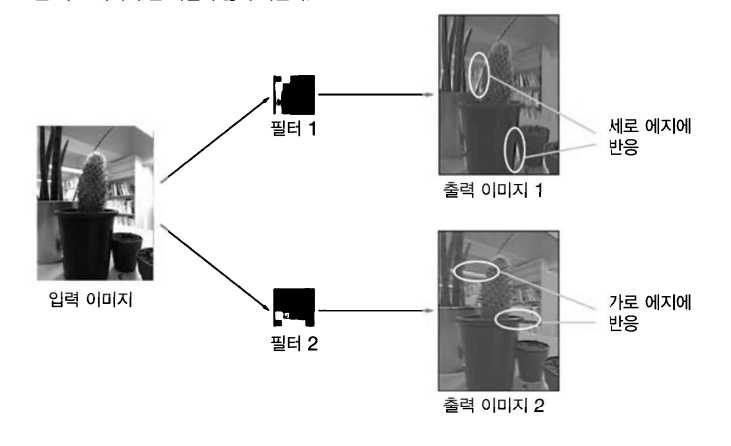

```python
    def gradient(self, x, t):
        """Compute the gradients (by backpropagation).

        Parameters
        ----------
        x : input data
        t : ground-truth labels

        Returns
        -------
        Dictionary holding the gradients of each layer
            grads['W1'], grads['W2'], ... weights of each layer
            grads['b1'], grads['b2'], ... biases of each layer
        """
        # forward
        self.loss(x, t)

        # backward
        dout = 1
        dout = self.last_layer.backward(dout)

        layers = list(self.layers.values())
        layers.reverse()
        for layer in layers:
            dout = layer.backward(dout)

        # Store the results
        grads = {}
        grads['W1'], grads['b1'] = self.layers['Conv1'].dW, self.layers['Conv1'].db
        grads['W2'], grads['b2'] = self.layers['Affine1'].dW, self.layers['Affine1'].db
        grads['W3'], grads['b3'] = self.layers['Affine2'].dW, self.layers['Affine2'].db

        return grads
```

7-6) Visualizing the CNN
