- Inception-v1

- Inception-v2

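- Inception-v3

- Starting from the Inception-v2 architecture, the techniques described above were added one at a time while measuring performance; the model with all of them applied, which showed the best performance, is Inception-v3

- Inception-v3 is Inception-v2 with BN-auxiliary + RMSProp + Label Smoothing + Factorized 7x7 all applied

<Reference: Inception v2, v3>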
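Of the techniques listed for Inception-v3, label smoothing is the easiest to show in a few lines. The sketch below is only an illustration: the function name `smooth_labels` and the value `epsilon=0.1` are assumptions chosen for the example, not something stated in these notes. The idea is to mix the one-hot target with a uniform distribution over the classes.

```python
def smooth_labels(true_class, num_classes, epsilon=0.1):
    """Return a smoothed target distribution for a single example.

    Each class gets epsilon / num_classes, and the true class additionally
    gets the remaining (1 - epsilon) of the probability mass.
    epsilon=0.1 is an illustrative default (assumption).
    """
    uniform_share = epsilon / num_classes
    target = [uniform_share] * num_classes
    target[true_class] += 1.0 - epsilon
    return target


# Hypothetical 4-class example with class 2 as the ground truth.
target = smooth_labels(true_class=2, num_classes=4, epsilon=0.1)
print(target)
```

The smoothed target still sums to 1, but no class is ever assigned probability exactly 0 or 1.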

0. Abstract

Very deep convolutional networks have been central to the largest advances in image recognition performance in recent years. One example is the Inception architecture, which has been shown to achieve very good performance at relatively low computational cost. Recently, the introduction of residual connections in conjunction with a more traditional architecture has yielded state-of-the-art performance in the 2015 ILSVRC challenge; its performance was similar to the latest generation Inception-v3 network. This raises the question of whether there is any benefit in combining the Inception architecture with residual connections. Here we give clear empirical evidence that training with residual connections accelerates the training of Inception networks significantly. There is also some evidence of residual Inception networks outperforming similarly expensive Inception networks without residual connections by a thin margin. We also present several new streamlined architectures for both residual and non-residual Inception networks. These variations improve the single-frame recognition performance on the ILSVRC 2012 classification task significantly. We further demonstrate how proper activation scaling stabilizes the training of very wide residual Inception networks. With an ensemble of three residual and one Inception-v4, we achieve 3.08% top-5 error on the test set of the ImageNet classification (CLS) challenge.

1. Introduction

Since the 2012 ImageNet competition [11] winning entry by Krizhevsky et al. [8], their network "AlexNet" has been successfully applied to a larger variety of computer vision tasks, for example to object detection [4], segmentation [10], human pose estimation [17], video classification [7], object tracking [18], and super-resolution [3]. These examples are but a few of all the applications to which deep convolutional networks have been very successfully applied ever since. In this work we study the combination of the two most recent ideas: residual connections introduced by He et al. in [5] and the latest revised version of the Inception architecture [15]. In [5], it is argued that residual connections are of inherent importance for training very deep architectures. Since Inception networks tend to be very deep, it is natural to replace the filter concatenation stage of the Inception architecture with residual connections. This would allow Inception to reap all the benefits of the residual approach while retaining its computational efficiency. Besides a straightforward integration, we have also studied whether Inception itself can be made more efficient by making it deeper and wider. For that purpose, we designed a new version named Inception-v4, which has a more uniform simplified architecture and more Inception modules than Inception-v3. Historically, Inception-v3 had inherited a lot of the baggage of the earlier incarnations. The technical constraints chiefly came from the need for partitioning the model for distributed training using DistBelief [2]. Now, after migrating our training setup to TensorFlow [1], these constraints have been lifted, which allowed us to simplify the architecture significantly. The details of that simplified architecture are described in Section 3.

In this report, we will compare the two pure Inception variants, Inception-v3 and v4, with similarly expensive hybrid Inception-ResNet versions. Admittedly, those models were picked in a somewhat ad hoc manner, with the main constraint being that the parameters and computational complexity of the models should be somewhat similar to the cost of the non-residual models. In fact we have tested bigger and wider Inception-ResNet variants and they performed very similarly on the ImageNet classification challenge [11] dataset. The last experiment reported here is an evaluation of an ensemble of all the best performing models presented here. As it was apparent that both Inception-v4 and Inception-ResNet-v2 performed similarly well, exceeding state-of-the-art single-frame performance on the ImageNet validation dataset, we wanted to see how a combination of those pushes the state of the art on this well-studied dataset. Surprisingly, we found that gains on the single-frame performance do not translate into similarly large gains on ensembled performance. Nonetheless, it still allows us to report 3.1% top-5 error on the validation set with four models ensembled, setting a new state of the art, to our best knowledge. In the last section, we study some of the classification failures and conclude that the ensemble still has not reached the label noise of the annotations on this dataset and there is still room for improvement for the predictions.

2. Related Work

Convolutional networks have become popular in large-scale image recognition tasks after Krizhevsky et al. [8]. Some of the next important milestones were Network-in-Network [9] by Lin et al., VGGNet [12] by Simonyan et al. and GoogLeNet (Inception-v1) [14] by Szegedy et al. Residual connections were introduced by He et al. in [5], in which they give convincing theoretical and practical evidence for the advantages of utilizing additive merging of signals both for image recognition, and especially for object detection. The authors argue that residual connections are inherently necessary for training very deep convolutional models. Our findings do not seem to support this view, at least for image recognition. However, it might require more measurement points with deeper architectures to understand the true extent of beneficial aspects offered by residual connections. In the experimental section we demonstrate that it is not very difficult to train competitive very deep networks without utilizing residual connections. However, the use of residual connections seems to improve the training speed greatly, which is alone a great argument for their use. The Inception deep convolutional architecture was introduced in [14] and was called GoogLeNet or Inception-v1 in our exposition. Later the Inception architecture was refined in various ways, first by the introduction of batch normalization [6] (Inception-v2) by Ioffe et al. Later the architecture was improved by additional factorization ideas in the third iteration [15], which will be referred to as Inception-v3 in this report.

3. Architectural Choices

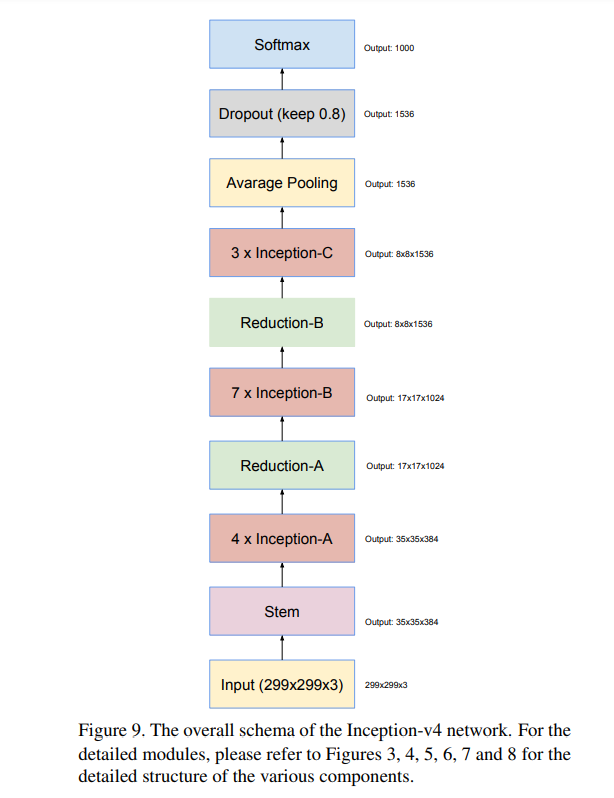

3.1. Pure Inception blocks

Our older Inception models used to be trained in a partitioned manner, where each replica was partitioned into multiple sub-networks in order to be able to fit the whole model in memory. However, the Inception architecture is highly tunable, meaning that there are a lot of possible changes to the number of filters in the various layers that do not affect the quality of the fully trained network. In order to optimize the training speed, we used to tune the layer sizes carefully in order to balance the computation between the various model sub-networks. In contrast, with the introduction of TensorFlow our most recent models can be trained without partitioning the replicas. This is enabled in part by recent optimizations of memory used by backpropagation, achieved by carefully considering what tensors are needed for gradient computation and structuring the computation to reduce the number of such tensors. Historically, we have been relatively conservative about changing the architectural choices and restricted our experiments to varying isolated network components while keeping the rest of the network stable. Not simplifying earlier choices resulted in networks that looked more complicated than they needed to be. In our newer experiments, for Inception-v4 we decided to shed this unnecessary baggage and made uniform choices for the Inception blocks for each grid size. Please refer to Figure 9 for the large-scale structure of the Inception-v4 network and Figures 3, 4, 5, 6, 7 and 8 for the detailed structure of its components. All the convolutions not marked with "V" in the figures are same-padded, meaning that their output grid matches the size of their input. Convolutions marked with "V" are valid-padded, meaning that the input patch of each unit is fully contained in the previous layer and the grid size of the output activation map is reduced accordingly.

3.2. Residual Inception Blocks

For the residual versions of the Inception networks, we use cheaper Inception blocks than the original Inception. Each Inception block is followed by a filter-expansion layer (1 × 1 convolution without activation), which is used for scaling up the dimensionality of the filter bank before the addition to match the depth of the input. This is needed to compensate for the dimensionality reduction induced by the Inception block. We tried several versions of the residual version of Inception. Only two of them are detailed here. The first one, "Inception-ResNet-v1", roughly matches the computational cost of Inception-v3, while "Inception-ResNet-v2" matches the raw cost of the newly introduced Inception-v4 network. See Figure 15 for the large-scale structure of both variants. (However, the step time of Inception-v4 proved to be significantly slower in practice, probably due to the larger number of layers.)

Another small technical difference between our residual and non-residual Inception variants is that in the case of Inception-ResNet, we used batch normalization only on top of the traditional layers, but not on top of the summations. It is reasonable to expect that a thorough use of batch normalization should be advantageous, but we wanted to keep each model replica trainable on a single GPU. It turned out that the memory footprint of layers with large activation size was consuming a disproportionate amount of GPU memory. By omitting the batch normalization on top of those layers, we were able to increase the overall number of Inception blocks substantially. We hope that with better utilization of computing resources, making this trade-off will become unnecessary.
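The filter-expansion step can be pictured as a per-position linear map over channels: the Inception branch produces fewer channels than the block input, and a 1 × 1 convolution without activation brings the depth back up so the two can be added. The following is a minimal pure-Python sketch under stated assumptions: the feature map is flattened to a list of spatial positions, the helper names (`conv1x1_linear`, `residual_block_output`) and the toy weights are hypothetical, and the ReLU after the summation is assumed rather than taken from the text above.

```python
def conv1x1_linear(feature_map, weights):
    """1x1 convolution with no activation.

    feature_map: list of spatial positions, each a list of C_in channel values.
    weights: C_out rows, each holding C_in coefficients.
    A 1x1 convolution applies the same linear map at every position.
    """
    return [
        [sum(w * v for w, v in zip(row, position)) for row in weights]
        for position in feature_map
    ]


def relu(values):
    return [max(0.0, v) for v in values]


def residual_block_output(block_input, branch_output, expansion_weights):
    """Expand the branch to the input depth, add it to the input, apply ReLU."""
    expanded = conv1x1_linear(branch_output, expansion_weights)
    return [
        relu([x + r for x, r in zip(inp, res)])
        for inp, res in zip(block_input, expanded)
    ]


# Toy example: 2 spatial positions, block input depth 4, branch depth 2.
block_input = [[1.0, -2.0, 0.5, 0.0], [0.0, 1.0, -1.0, 2.0]]
branch_output = [[1.0, 2.0], [-1.0, 0.5]]
expansion_weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]  # 4 x 2

output = residual_block_output(block_input, branch_output, expansion_weights)
print(output)
```

Without the expansion layer the element-wise addition would not be defined, since the branch has 2 channels and the input has 4.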

3.3. Scaling of the Residuals

Also we found that if the number of filters exceeded 1000, the residual variants started to exhibit instabilities and the network just "died" early in the training, meaning that the last layer before the average pooling started to produce only zeros after a few tens of thousands of iterations. This could not be prevented, neither by lowering the learning rate, nor by adding an extra batch normalization to this layer. We found that scaling down the residuals before adding them to the previous layer activation seemed to stabilize the training. In general we picked some scaling factors between 0.1 and 0.3 to scale the residuals before their being added to the accumulated layer activations (cf. Figure 20). A similar instability was observed by He et al. in [5] in the case of very deep residual networks, and they suggested a two-phase training where the first "warm-up" phase is done with a very low learning rate, followed by a second phase with a high learning rate. We found that if the number of filters is very high, then even a very low (0.00001) learning rate is not sufficient to cope with the instabilities, and the training with high learning rate had a chance to destroy its effects. We found it much more reliable to just scale the residuals. Even where the scaling was not strictly necessary, it never seemed to harm the final accuracy, but it helped to stabilize the training.
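The residual scaling described in this section amounts to multiplying the residual branch by a small constant before the addition. The sketch below is a toy illustration, not a reproduction of the reported instability: the function name `scaled_residual_add` is hypothetical, the input values are made up, the scale 0.2 is simply one value inside the 0.1 to 0.3 range mentioned above, and the ReLU after the sum is an assumption.

```python
def relu(values):
    return [max(0.0, v) for v in values]


def scaled_residual_add(activation, residual, scale=0.2):
    """Scale the residual down, add it to the accumulated activation, apply ReLU.

    scale=0.2 is an illustrative value from the 0.1-0.3 range (assumption).
    """
    return relu([a + scale * r for a, r in zip(activation, residual)])


# Made-up values: a residual branch whose outputs are much larger than the input.
activation = [1.0, -0.5, 2.0]
residual = [10.0, -10.0, 5.0]

unscaled = scaled_residual_add(activation, residual, scale=1.0)
scaled = scaled_residual_add(activation, residual, scale=0.2)
print("unscaled:", unscaled)
print("scaled:  ", scaled)
```

With the scale applied, the residual branch changes the accumulated activation far less per block, which is the intuition behind the stabilizing effect.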

4. Training Methodology

We have trained our networks with stochastic gradient utilizing the TensorFlow [1] distributed machine learning system using 20 replicas, each running on an NVidia Kepler GPU. Our earlier experiments used momentum [13] with a decay of 0.9, while our best models were achieved using RMSProp [16] with decay of 0.9 and ε = 1.0. We used a learning rate of 0.045, decayed every two epochs using an exponential rate of 0.94. Model evaluations are performed using a running average of the parameters computed over time.
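The learning-rate schedule above (start at 0.045, decay by a factor of 0.94 every two epochs) and the running average of parameters can be sketched in plain Python. Two things here are assumptions: the decay is treated as a staircase (the rate only changes at every second epoch), and the averaging `momentum` is an illustrative value, since the text does not state one. The function names are hypothetical.

```python
def learning_rate(epoch, base_lr=0.045, decay_rate=0.94, epochs_per_decay=2):
    """Staircase exponential decay: multiply by decay_rate every epochs_per_decay epochs."""
    decay_steps = epoch // epochs_per_decay
    return base_lr * decay_rate ** decay_steps


def update_running_average(average, params, momentum=0.999):
    """Exponential running average of parameters; momentum is an assumed value."""
    return [momentum * a + (1.0 - momentum) * p for a, p in zip(average, params)]


# Learning rate at the start of a few epochs.
for epoch in range(0, 7, 2):
    print(epoch, learning_rate(epoch))

# One averaging step on made-up parameter values.
averaged = update_running_average([0.0, 1.0], [1.0, 1.0], momentum=0.9)
print(averaged)
```

Evaluation would then use the averaged parameters rather than the latest raw ones.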

0. Abstract/Intro

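- Combining residual connections with the existing Inception model enables faster training

- Inception with residual connections performs slightly better than similarly expensive Inception without them

- Inception-v4 was designed to make the Inception network wider and deeper more effectively

- It uses a simpler, more uniform structure and more Inception modules than Inception-v3

- Inception-ResNet combines Inception blocks with residual connections → training becomes faster

- Inception-ResNet-v1 has a computational cost similar to Inception-v3

- Inception-ResNet-v2 has a computational cost similar to Inception-v4

⇒ The paper mainly describes two models: Inception-v4 and Inception-ResNet (Inception + ResNet)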

Inception-v4 vs Inception-ResNet

1. Inception-v4 architecture

1-1. Stem

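- After layers such as Conv and MaxPool, padding is needed to keep the dimensions matched

- Layers marked with V use no padding (valid padding)

- Layers without V use zero padding (same padding, so input and output sizes are equal)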

https://ardino-lab.com/padding-valid-padding-full-padding-same-padding/
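The effect of the V (valid) marking on the grid size can be checked with a small calculation. This is a sketch with a hypothetical helper `output_size`; the "same" branch follows the common convention that the output size is ceil(input / stride), which is an assumption about the framework rather than something stated in these notes. The example values (299 input, 3x3 kernels) are illustrative.

```python
import math


def output_size(input_size, kernel_size, stride, padding):
    """Spatial output size of a conv/pool layer along one dimension.

    "valid": no padding, every input patch lies fully inside the input.
    "same":  zero padding so that output = ceil(input / stride) (assumed convention).
    """
    if padding == "valid":
        return (input_size - kernel_size) // stride + 1
    if padding == "same":
        return math.ceil(input_size / stride)
    raise ValueError(f"unknown padding: {padding}")


# Illustrative chain: a 299-wide input through three 3x3 convolutions.
size = 299
size = output_size(size, kernel_size=3, stride=2, padding="valid")  # marked V
print(size)
size = output_size(size, kernel_size=3, stride=1, padding="valid")  # marked V
print(size)
size = output_size(size, kernel_size=3, stride=1, padding="same")   # not marked V
print(size)
```

Only the V-marked layers (and strided layers) shrink the grid; a same-padded stride-1 layer leaves it unchanged.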

1-2. Inception-A

1-3. Inception-B

1-4. Inception-C

1-5. Reduction-A

1-6. Reduction-B

2. Inception + ResNet

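- Inception-ResNet combines the Inception network with residual blocks

- Inception-ResNet comes in two versions, v1 and v2

- v1 and v2 share the same overall structure, but the individual modules differ

- The stem and the number of filters used in each Inception module are different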

2-1. Stem

- v2 reuses the stem from Inception-v4

2-2. Inception-ResNet-A

- The number of channels in the final 1x1 conv differs between v1 and v2

- In the final 1x1 conv, "Linear" after the number means that no activation function is applied

2-3. Inception-ResNet-B

2-4. Inception-ResNet-C

2-5. Reduction-A

- v1 and v2 both use the same Reduction-A module

2-6. Reduction-B

3. Results

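(1) Training curves: Inception-v3 vs. Inception-ResNet-v1

- The two are compared because Inception-v3 and Inception-ResNet-v1 have similar computational cost

(2) Training curves: Inception-v4 vs. Inception-ResNet-v2

- The two are compared because Inception-v4 and Inception-ResNet-v2 have similar computational cost

(3) Performance comparison

Conclusion: Inception-ResNet-v2 shows the best performance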
